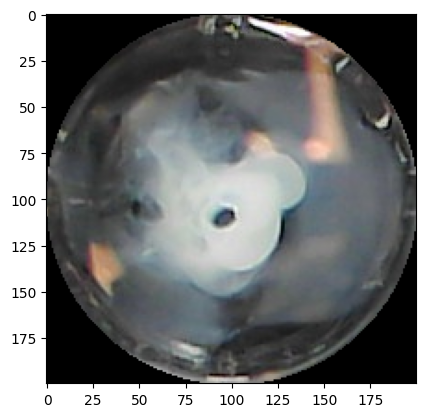

Image Pre-processing

```python
import numpy as np
from PIL import Image, ImageDraw, ImageEnhance


def pre_process_image(image_path, contrast_factor=1.5):
    # Load image
    image = Image.open(image_path).convert("RGB")

    # Increase contrast (a factor of 1.0 leaves the image unchanged)
    enhancer = ImageEnhance.Contrast(image)
    image = enhancer.enhance(contrast_factor)

    np_image = np.array(image)

    # Create a circular mask; center is (x, y) in pixels, hard-coded for this well position
    height, width, _ = np_image.shape
    center = (370, 305)
    radius = 100
    mask = Image.new("L", (width, height), 0)
    draw = ImageDraw.Draw(mask)
    draw.ellipse((center[0] - radius, center[1] - radius,
                  center[0] + radius, center[1] + radius), fill=255)

    # Apply the mask to the image as an alpha channel
    masked_image = Image.fromarray(np_image)
    masked_image.putalpha(mask)
    masked_image = np.array(masked_image)

    # Crop the image to the square that bounds the circle (rows are y, columns are x)
    left = center[0] - radius
    top = center[1] - radius
    right = center[0] + radius
    bottom = center[1] + radius
    masked_image = masked_image[top:bottom, left:right]

    # Extract RGB channels and alpha channel
    np_image_rgb = masked_image[:, :, :3]
    alpha_channel = masked_image[:, :, 3]

    # Create a black background
    black_background = np.zeros_like(np_image_rgb)

    # Set every pixel outside the circle (alpha == 0) to black
    np_image_rgb = np.where(alpha_channel[..., None] == 0, black_background, np_image_rgb)

    return np_image_rgb
```

Example

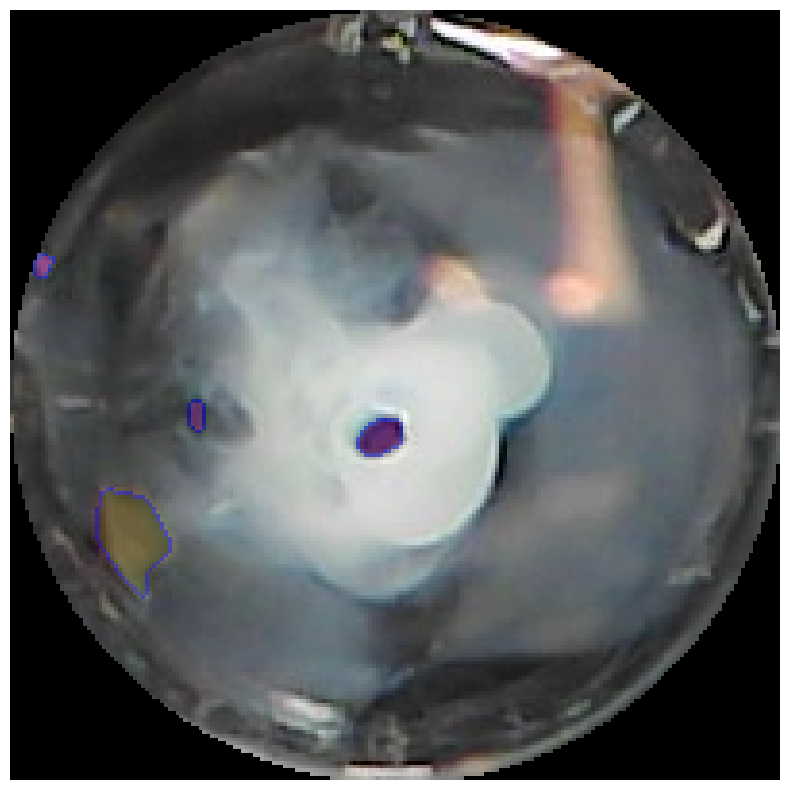
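The same circular mask and crop can also be sketched directly in NumPy with a boolean distance mask, which skips the round trip through a PIL alpha channel. This is a minimal sketch rather than the code used above: `circular_crop` is a hypothetical name, `center` is assumed to be `(x, y)` in pixels as in `pre_process_image`, the circle is assumed to lie fully inside the image, and pixels exactly on the boundary may be handled slightly differently from `ImageDraw.ellipse`.

```python
import numpy as np


def circular_crop(np_image, center=(370, 305), radius=100):
    """Hypothetical helper: black out pixels outside a circle, then crop to its bounding square.

    np_image: H x W x 3 uint8 array; center: (x, y) in pixels; radius in pixels.
    Assumes the circle lies fully inside the image.
    """
    cx, cy = center
    height, width = np_image.shape[:2]

    # Squared distance of every pixel from the circle center (rows are y, columns are x)
    ys, xs = np.ogrid[:height, :width]
    inside = (xs - cx) ** 2 + (ys - cy) ** 2 <= radius ** 2

    # Keep pixels inside the circle and set everything else to black
    masked = np.where(inside[..., None], np_image, 0).astype(np_image.dtype)

    # Crop to the square that bounds the circle (same slicing as pre_process_image)
    return masked[cy - radius:cy + radius, cx - radius:cx + radius]


# Usage on a synthetic all-white 600 x 800 image
demo = np.full((600, 800, 3), 255, dtype=np.uint8)
cropped = circular_crop(demo)
print(cropped.shape)  # (200, 200, 3)
```

The corners of the crop fall outside the circle and come out black, matching what `pre_process_image` produces.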

There are still some limitations when using SAM2.

Example

Other pre-processing steps that were tried but didn’t make much of a difference:

- increasing contrast

- increasing brightness

- preliminary edge detection
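For reference, the three variants listed above can be produced with Pillow along these lines. This is a minimal sketch: the factors (1.5 for contrast, 1.3 for brightness) are illustrative, Pillow’s built-in `FIND_EDGES` kernel stands in for whichever edge detector was actually tried, and `variants` is a hypothetical helper name.

```python
import numpy as np
from PIL import Image, ImageEnhance, ImageFilter


def variants(image):
    """Hypothetical helper returning the pre-processing variants that were compared."""
    image = image.convert("RGB")
    return {
        # Factor > 1 increases contrast; 1.0 returns the original image
        "contrast": ImageEnhance.Contrast(image).enhance(1.5),
        # Factor > 1 brightens; 1.0 returns the original image
        "brightness": ImageEnhance.Brightness(image).enhance(1.3),
        # Simple 3x3 edge-detection kernel applied to a grayscale copy
        "edges": image.convert("L").filter(ImageFilter.FIND_EDGES),
    }


# Usage on a synthetic gray image with a brighter square in the middle
arr = np.full((100, 100, 3), 100, dtype=np.uint8)
arr[40:60, 40:60] = 200
out = variants(Image.fromarray(arr))
for name, img in out.items():
    print(name, img.mode, img.size)
```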

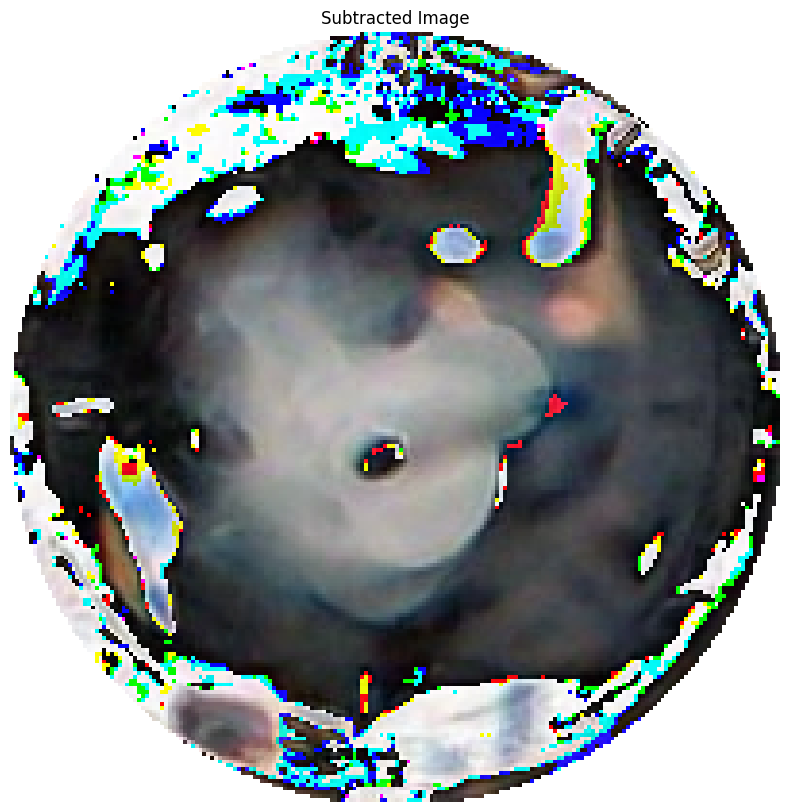

Pre-Image Subtraction

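```python
import matplotlib.pyplot as plt
import numpy as np

sub_image_filename = "../data/trial-1/10-A10_MQ_Ket_1_DS_PREIMAGE.jpg"

# Pre-process the pre-image the same way, with contrast_factor=1 (contrast unchanged)
np_subtraction = pre_process_image(sub_image_filename, contrast_factor=1)
plt.imshow(np_subtraction)

# np_image is assumed to hold the current image, pre-processed the same way.
# Ensure both images have the same height and width.
if np_image.shape[:2] == np_subtraction.shape[:2]:
    # Subtract the pre-image (np_subtraction) from the current image (np_image).
    # Cast to a signed type first so negative values don't wrap around in uint8,
    # then clip back to the valid 0-255 range.
    diff = np_image[:, :, :3].astype(np.int16) - np_subtraction[:, :, :3].astype(np.int16)
    result_image = np.clip(diff, 0, 255).astype(np.uint8)

    # Display the result
    plt.figure(figsize=(10, 10))
    plt.imshow(result_image)
    plt.title("Subtracted Image")
    plt.axis('off')
    plt.show()
else:
    print("The images are not of the same shape and cannot be subtracted.")
```

Example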

This looked really promising: it should be relatively easy for a model to learn from the current image together with the pre-image.
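One way to give a model both images is to stack them along the channel axis, so every pixel carries its before and after values. This is a minimal sketch, not something the notes say was implemented: `make_model_input` is a hypothetical name, both inputs are assumed to be the H x W x 3 uint8 outputs of `pre_process_image`, and scaling to float32 in [0, 1] is an illustrative choice.

```python
import numpy as np


def make_model_input(current_rgb, pre_rgb):
    """Hypothetical helper: stack the current image and the pre-image into one H x W x 6 array."""
    if current_rgb.shape != pre_rgb.shape:
        raise ValueError(f"shape mismatch: {current_rgb.shape} vs {pre_rgb.shape}")
    # Channels 0-2: current image, channels 3-5: pre-image
    stacked = np.concatenate([current_rgb, pre_rgb], axis=-1)
    # Scale uint8 values to [0, 1]
    return stacked.astype(np.float32) / 255.0


# Usage with synthetic images: material appears in the current image only
current = np.zeros((200, 200, 3), dtype=np.uint8)
current[50:100, 50:100] = 255
pre = np.zeros((200, 200, 3), dtype=np.uint8)
x = make_model_input(current, pre)
print(x.shape, x.dtype)  # (200, 200, 6) float32
```

A model whose first layer accepts six channels could consume `x` directly; a wrap-safe difference channel could be appended the same way.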

Testing a New Model: CV2_MOG2

Advantages

- Can set the background using the learning rate

- Can ensure the background doesn’t update

- Designed for videos/image sequences by default

Some GIFs

- Determine if some wells are under-performing

- Get a quick fit metric and the well position

- Try to extract useful information now

- Higher cross-linking

- More tightly packed

- Brighter

- Don’t let this bias your conclusions

- Look for trends!
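To look for trends across wells, a couple of simple per-well numbers can be tabulated from the foreground masks: how much of the well is covered, and how bright the covered region is (the notes above link higher cross-linking with tighter packing and brighter images). This is a minimal sketch under stated assumptions: the function names, the choice of metrics, and the rule that flags a well as under-performing when it falls below half the median are all hypothetical, not part of the original analysis.

```python
import numpy as np


def well_metrics(image_rgb, fg_mask):
    """Hypothetical per-well summary: foreground coverage and mean foreground brightness."""
    gray = image_rgb.astype(np.float32).mean(axis=-1)
    fg = fg_mask > 0
    coverage = float(fg.mean())  # fraction of pixels marked as foreground
    brightness = float(gray[fg].mean()) if fg.any() else 0.0
    return {"coverage": coverage, "brightness": brightness}


def flag_underperforming(metrics_by_well, key="coverage", fraction=0.5):
    """Flag wells whose metric is below `fraction` of the median across wells (assumed rule)."""
    values = np.array([m[key] for m in metrics_by_well.values()])
    cutoff = fraction * np.median(values)
    return [well for well, m in metrics_by_well.items() if m[key] < cutoff]


# Usage with synthetic wells: A10 fully covered, B10 half covered, C10 barely covered
img = np.full((100, 100, 3), 180, dtype=np.uint8)
masks = {name: np.zeros((100, 100), dtype=np.uint8) for name in ("A10", "B10", "C10")}
masks["A10"][:, :] = 255
masks["B10"][:50, :] = 255
masks["C10"][:5, :] = 255
metrics = {name: well_metrics(img, m) for name, m in masks.items()}
print(flag_underperforming(metrics))  # ['C10']
```

Comparing against the median of the same trial, rather than a fixed threshold, keeps the flag relative to the other wells imaged under the same conditions.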